OpenPTrack is an open source project launched in 2013 to create a scalable, multi-camera solution for person tracking.

It enables many people to be tracked over large areas in real time.

It is designed for applications in education, arts and culture, as a starting point for exploring group interaction with digital environments.

How OpenPTrack Works…


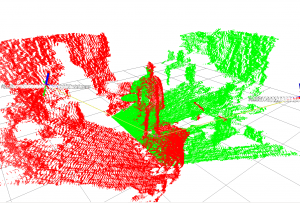

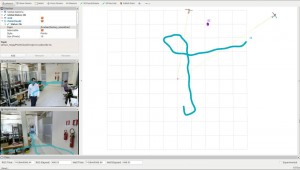


OpenPTrack uses a network of imagers to track the moving centroids (centers of mass) of people within a defined area.
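To illustrate what "centroid" means here, the sketch below takes the unweighted mean of the 3D points assigned to one person. It assumes every point has equal mass. This page does not describe how OpenPTrack segments people or weights their points, and `centroid` is a hypothetical helper.

```python
from typing import Iterable, Tuple

Point3 = Tuple[float, float, float]  # (x, y, z) in meters


def centroid(points: Iterable[Point3]) -> Point3:
    """Hypothetical helper: mean (x, y, z) of one person's points, equal weights assumed."""
    sx = sy = sz = 0.0
    n = 0
    for x, y, z in points:
        sx += x
        sy += y
        sz += z
        n += 1
    if n == 0:
        raise ValueError("cannot compute the centroid of an empty cluster")
    return (sx / n, sy / n, sz / n)
```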

It supports several types of 3D imagers that provide RGB + depth data. For example, an OpenPTrack system can include the following:

- Microsoft Kinect for Xbox 360 (v1)

- Microsoft Kinect for Xbox One (v2)

- Mesa SwissRanger SR4500

- Stereo PointGrey Blackfly PoE GigE and USB 3.0 cameras

To detect people in the sensor streams, each imager in the network must be connected to a PC running Ubuntu Linux, and each PC must be connected to a local Gigabit Ethernet network. The people detections from every sensor are sent to a master process, running on one of those machines, which merges them into a global tracking output.
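The merge on the master can be pictured as data association: each incoming detection either continues an existing track or starts a new one. The sketch below is a deliberately simple, hypothetical stand-in (greedy nearest-neighbor matching of ground-plane positions within a distance gate), not OpenPTrack's actual tracker. It assumes detections from all sensors are already expressed in one common frame. It also omits merging duplicate detections of the same person from overlapping sensors and keeping tracks alive through brief misses.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Point2 = Tuple[float, float]  # (x, y) on the ground plane, meters


@dataclass
class GreedyTracker:
    """Hypothetical sketch of detection-to-track association; not OpenPTrack's tracker."""
    gate: float = 0.5  # max distance (meters) for a detection to continue a track
    tracks: Dict[int, Point2] = field(default_factory=dict)
    next_id: int = 1

    def update(self, detections: List[Point2]) -> Dict[int, Point2]:
        # Collect every (distance, track_id, detection_index) pair inside the gate.
        pairs = []
        for tid, (tx, ty) in self.tracks.items():
            for di, (dx, dy) in enumerate(detections):
                dist = math.hypot(dx - tx, dy - ty)
                if dist <= self.gate:
                    pairs.append((dist, tid, di))
        pairs.sort()  # closest pairs are matched first

        matched_tracks, matched_dets = set(), set()
        updated: Dict[int, Point2] = {}
        for _dist, tid, di in pairs:
            if tid in matched_tracks or di in matched_dets:
                continue
            matched_tracks.add(tid)
            matched_dets.add(di)
            updated[tid] = detections[di]

        # Any detection left unmatched starts a new track with a fresh ID.
        for di, det in enumerate(detections):
            if di not in matched_dets:
                updated[self.next_id] = det
                self.next_id += 1

        # Tracks without a matching detection are dropped at once (no coasting).
        self.tracks = updated
        return updated
```

Calling `update` once per frame with the pooled detections returns the current `{track_id: position}` map. A person who stays within `gate` meters of their previous position keeps the same ID.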

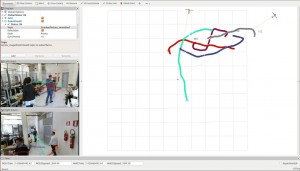

This tracking data is delivered at 30 frames per second as a simple JSON-formatted stream over UDP, which can be easily incorporated into creative coding tools such as Max/MSP, TouchDesigner, and Processing.
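A minimal receiver for such a stream needs only the Python standard library. The port number below is a placeholder (use whatever port your OpenPTrack setup sends to). The code assumes each UDP datagram carries exactly one complete JSON object. The function names are illustrative.

```python
import json
import socket
from typing import Any, Callable, Dict, Optional

DEFAULT_PORT = 5005  # placeholder port; use the one your OpenPTrack setup sends to


def open_receiver(host: str = "0.0.0.0", port: int = DEFAULT_PORT) -> socket.socket:
    """Bind a UDP socket that will receive the tracking stream."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((host, port))
    return sock


def receive_frame(sock: socket.socket, bufsize: int = 65535) -> Dict[str, Any]:
    """Block until one datagram arrives and decode it as JSON (one object per datagram assumed)."""
    data, _addr = sock.recvfrom(bufsize)
    return json.loads(data.decode("utf-8"))


def listen(sock: socket.socket,
           handle: Callable[[Dict[str, Any]], None],
           max_frames: Optional[int] = None) -> None:
    """Pass each decoded frame to `handle`; runs forever when max_frames is None."""
    count = 0
    while max_frames is None or count < max_frames:
        handle(receive_frame(sock))
        count += 1
```

For example, `listen(open_receiver(), print)` prints every frame as it arrives, which is a quick way to inspect the stream before wiring it into another tool.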

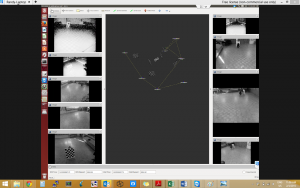

OpenPTrack's detection techniques exploit both intensity and depth data to detect people robustly at distances of up to 10 meters, and they work in cluttered indoor environments regardless of lighting conditions or sensor height. (At this time, OpenPTrack assumes that all people are walking on the same ground plane.)

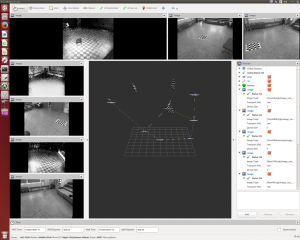

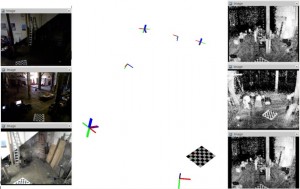

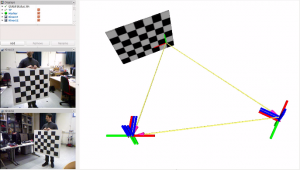

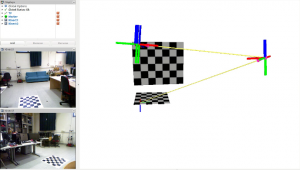

OpenPTrack uses multiple sensors to extend the field of view and range beyond what a single sensor could cover. Multiple sensors also make tracking more robust against occlusions. To merge detections from multiple sensors, the tracking node needs to know the pose of each camera with respect to a common reference frame.
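Knowing a camera's pose means knowing a rotation R and a translation t that map points from that camera's frame into the shared frame: p_world = R p_cam + t. The sketch below applies that rigid transform in plain Python. The function name and the row-major 3x3 nested-list representation of R are illustrative assumptions.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]  # row-major 3x3 rotation matrix


def camera_to_world(p_cam: Vec3, R: Mat3, t: Vec3) -> Vec3:
    """Map a point from a camera's frame into the common frame: R @ p_cam + t."""
    return tuple(
        sum(R[i][j] * p_cam[j] for j in range(3)) + t[i] for i in range(3)
    )
```

Once each sensor's detections pass through that sensor's own (R, t), detections of the same person from different cameras land at nearly the same world coordinates and can be merged.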

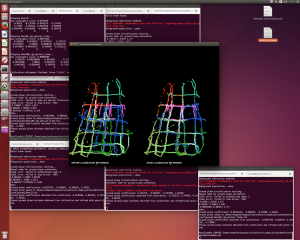

This is accomplished with a simple calibration procedure: a checkerboard is moved in front of the sensors. Within a few seconds of the checkerboard being shown to the sensor(s), the pose of each sensor is computed automatically, and real-time feedback about the calibration process is shown on screen. At the end of the calibration routine, the checkerboard is placed on the floor so that the position of the ground plane can be estimated precisely.
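Placing the checkerboard on the floor gives points known to lie on the ground plane. As a minimal sketch, assuming three non-collinear corner positions already expressed in the common frame, the plane follows from a cross product. A person's height above the floor is then the point-to-plane distance. A real calibration would more likely fit the plane to all detected corners by least squares. The three-point version and the helper names are simplifications for illustration.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Plane = Tuple[Vec3, float]  # (unit normal n, offset d) with n . x + d = 0


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def plane_from_points(a: Vec3, b: Vec3, c: Vec3) -> Plane:
    """Plane through three floor points (e.g. checkerboard corners, assumed non-collinear)."""
    n = _cross(_sub(b, a), _sub(c, a))
    norm = math.sqrt(n[0] ** 2 + n[1] ** 2 + n[2] ** 2)
    if norm == 0.0:
        raise ValueError("points are collinear; pick three non-collinear corners")
    n = (n[0] / norm, n[1] / norm, n[2] / norm)
    d = -(n[0] * a[0] + n[1] * a[1] + n[2] * a[2])
    return n, d


def height_above_plane(p: Vec3, plane: Plane) -> float:
    """Unsigned distance from point p to the ground plane."""
    n, d = plane
    return abs(n[0] * p[0] + n[1] * p[1] + n[2] * p[2] + d)
```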

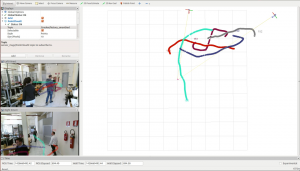

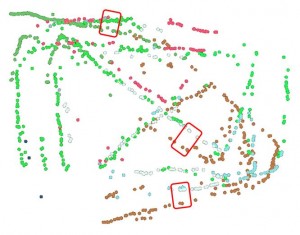

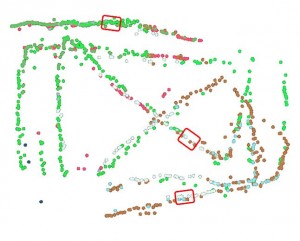

In order to improve calibration in difficult conditions (e.g., when sensors are very far from each other or have low resolution), there is an additional (optional) calibration refinement procedure. This procedure simply requires a person to walk throughout the entire tracking workspace. The detection trajectories obtained from each sensor are then aligned to each other, and camera poses are corrected according to this alignment.
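This page does not say which alignment method the refinement step uses. One standard choice, shown here as an assumption, is a closed-form least-squares rigid alignment (2D Procrustes). It is applied to time-matched ground-plane positions of the walking person as seen by two sensors, so `src[i]` and `dst[i]` must refer to the same instant. The function names are hypothetical.

```python
import math
from typing import List, Tuple

Point2 = Tuple[float, float]


def align_2d(src: List[Point2], dst: List[Point2]) -> Tuple[float, Point2]:
    """Least-squares rotation angle and translation mapping src onto dst.

    Assumes src[i] and dst[i] are the same person at the same timestamp,
    seen by two sensors and projected onto the ground plane.
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two corresponding points")
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    # Sum dot and cross products of the centered point pairs.
    s_dot = s_cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy
        s_dot += px * qx + py * qy
        s_cross += px * qy - py * qx
    # This angle maximizes sum(q . R p), i.e. minimizes the squared residuals.
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    # The translation moves the rotated source centroid onto the target centroid.
    t = (dx - (c * sx - s * sy), dy - (s * sx + c * sy))
    return theta, t


def apply_2d(theta: float, t: Point2, p: Point2) -> Point2:
    """Rotate p by theta, then translate by t."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + t[0], s * p[0] + c * p[1] + t[1])
```

The recovered (theta, t) could then be composed with the sensor's existing pose to correct it.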

Once calibration is complete, people tracking can be performed from the master node, which fuses the detections computed locally on all the individual processing nodes. The tracking output can be inspected in a 3D visualizer that shows the camera poses and the people trajectories. Each track is assigned a unique ID and a distinct color. The output is sent as a JSON stream over UDP; for every track, in addition to the ID number, the UDP messages contain:

- 3D position of the centroid

- height from the ground plane

- duration (age)

- tracking confidence
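A consumer of the stream typically turns each message into typed records. The exact JSON layout is not given on this page, so the key names below (`tracks`, `id`, `x`, `y`, `z`, `height`, `age`, `confidence`) are assumptions chosen to mirror the list above. Adjust them to match the messages your system actually emits.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class Track:
    track_id: int
    x: float           # centroid position in the common frame (meters assumed)
    y: float
    z: float
    height: float      # height above the ground plane
    age: float         # how long the track has existed
    confidence: float  # tracking confidence


def parse_tracks(message: str) -> List[Track]:
    """Decode one UDP payload; the key names are assumptions, not a published schema."""
    doc = json.loads(message)
    return [
        Track(
            track_id=int(t["id"]),
            x=float(t["x"]),
            y=float(t["y"]),
            z=float(t["z"]),
            height=float(t["height"]),
            age=float(t["age"]),
            confidence=float(t["confidence"]),
        )
        for t in doc.get("tracks", [])
    ]
```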

OpenPTrack imposes no limit on the number of people tracked in the tracking area, nor on the dimensions of the tracking area.

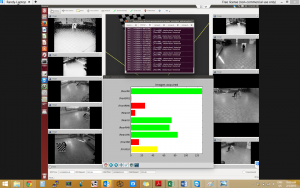

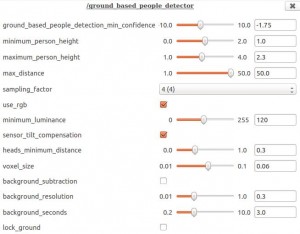

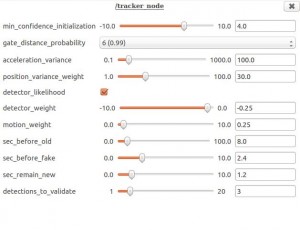

OpenPTrack also allows all parameters of the real-time people detection and tracking algorithms to be changed while the system is running. A dedicated GUI provides this for every node of the network. When the background of the tracking workspace is static, the background subtraction option can be used to improve the rejection of false tracks; this option can also be enabled at runtime from the people detection GUI.
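To see why a static background helps reject false tracks, consider a simple depth-based background subtraction. A pixel counts as foreground when it is noticeably closer to the sensor than a previously captured background depth map. A detection is kept only if enough of its pixels are foreground. This is a generic, hypothetical sketch: the thresholds and function names are assumptions, and OpenPTrack's own background subtraction option may work differently.

```python
from typing import List, Tuple

DepthImage = List[List[float]]  # depth in meters; 0.0 marks a missing reading


def foreground_mask(depth: DepthImage, background: DepthImage,
                    threshold: float = 0.1) -> List[List[bool]]:
    """True where both readings are valid and depth is > threshold closer than background."""
    mask = []
    for row_d, row_b in zip(depth, background):
        mask.append([
            d > 0.0 and b > 0.0 and (b - d) > threshold
            for d, b in zip(row_d, row_b)
        ])
    return mask


def keep_detection(mask: List[List[bool]], pixels: List[Tuple[int, int]],
                   min_fraction: float = 0.5) -> bool:
    """Keep a detection only if enough of its (row, col) pixels are foreground."""
    if not pixels:
        return False
    hits = sum(1 for r, c in pixels if mask[r][c])
    return hits / len(pixels) >= min_fraction
```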

If you would like even more details, check out this paper:

M. Munaro, A. Horn, R. Illum, J. Burke and R. B. Rusu. OpenPTrack: People Tracking for Heterogeneous Networks of Color-Depth Cameras. In IAS-13 Workshop Proceedings: 1st Intl. Workshop on 3D Robot Perception with Point Cloud Library, pp. 235-247, Padova, Italy, 2014.