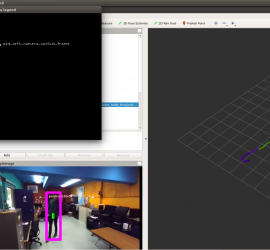

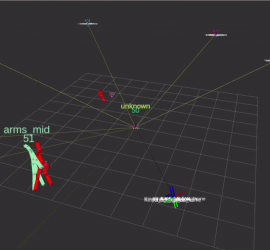

RealSense & Zed Support Added in OPT V2.2

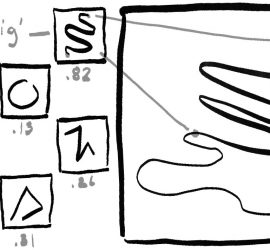

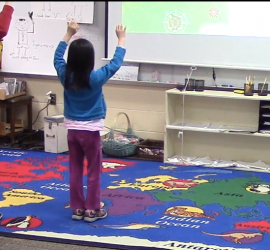

Support for the Stereolabs Zed and Intel RealSense imagers has been added in V2.2 of OpenPTrack, along with preliminary support (people tracking only) for the Microsoft Kinect Azure. The Kinect V2 also continues to be supported. V2.2 also includes updates to the underlying software stack, to Ubuntu 18.04 […]